
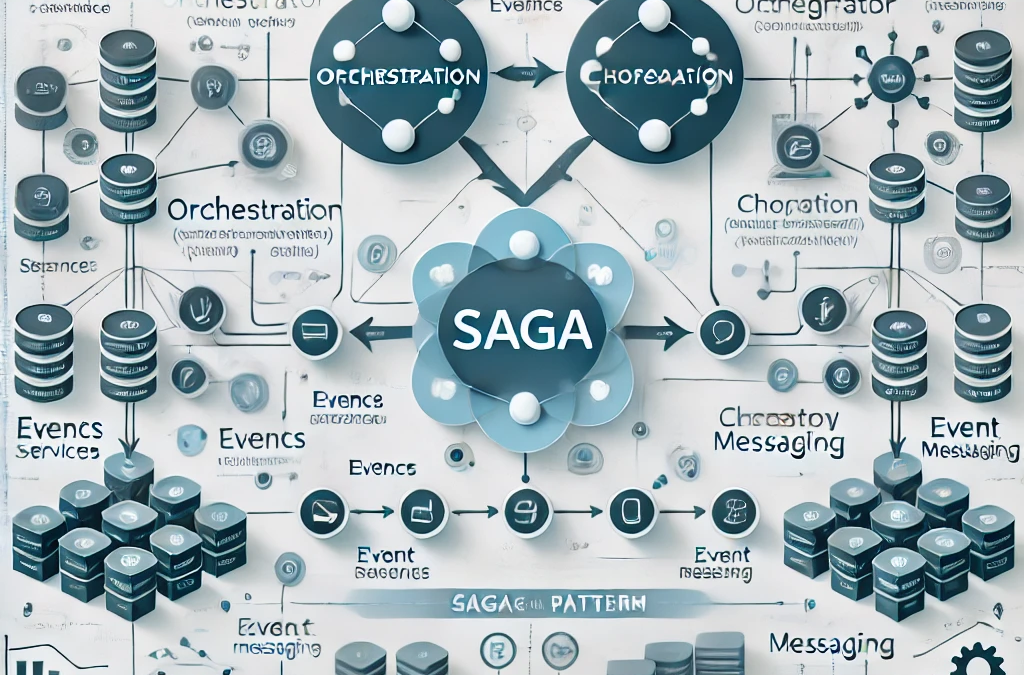

In today’s distributed architectures, managing transactions that span multiple microservices is one of the hardest problems to get right. This is where the SAGA pattern comes in: a widely used approach for achieving eventual consistency across services without a global transaction.

What is the SAGA Pattern?

The SAGA pattern is an approach for managing distributed transactions in microservices architectures. Instead of running a single transaction that locks resources across all the services involved, as the traditional two-phase commit (2PC) protocol does, a saga splits the work into a sequence of local transactions (steps). Each step is committed by a single service and is paired with a compensating action that semantically undoes it if a later step fails.
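To make the idea concrete, here is a minimal in-process sketch in Python of a saga executor acting as a simple orchestrator. It assumes each step is a pair of plain functions; `SagaStep`, `run_saga`, and the order-flow steps are hypothetical names, not part of any framework. In a real system each action would be a call to a separate service, and the saga's progress would be stored durably.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SagaStep:
    """One local transaction plus the compensating action that undoes it."""
    name: str
    action: Callable[[], None]
    compensation: Callable[[], None]


class SagaFailed(Exception):
    """Raised once a step has failed and earlier steps have been compensated."""


def run_saga(steps: List[SagaStep]) -> None:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[SagaStep] = []
    for step in steps:
        try:
            step.action()  # local transaction, committed by one service
        except Exception as exc:
            # The failed step committed nothing, so only earlier steps are undone.
            for done in reversed(completed):
                done.compensation()
            raise SagaFailed(f"step '{step.name}' failed: {exc}") from exc
        completed.append(step)


# Usage: a hypothetical order flow whose payment step fails.
log: List[str] = []


def charge_card() -> None:
    raise RuntimeError("card declined")


steps = [
    SagaStep("create_order", lambda: log.append("order created"),
             lambda: log.append("order cancelled")),
    SagaStep("reserve_stock", lambda: log.append("stock reserved"),
             lambda: log.append("stock released")),
    SagaStep("charge_card", charge_card, lambda: log.append("card refunded")),
]

try:
    run_saga(steps)
except SagaFailed as err:
    print(err)  # step 'charge_card' failed: card declined
print(log)  # ['order created', 'stock reserved', 'stock released', 'order cancelled']
```

This sketch lets a failing compensation propagate; production implementations retry compensations until they succeed, which is why compensations need to be idempotent.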

What is Two-Phase Commit (2PC)?

Two-Phase Commit (2PC) is a protocol for achieving strong consistency in distributed transactions. A coordinator drives a transaction involving multiple participants (nodes or services) through two phases:

- Prepare phase: The coordinator sends a prepare request to every participant. Each participant durably records its pending changes, keeps the locks it needs, and votes yes (it can commit) or no.

- Commit phase: If every participant voted yes, the coordinator sends a commit request and the changes become permanent. If any participant voted no or did not respond, the coordinator tells all participants to roll back.

2PC ensures strong consistency, but participants hold their locks from the prepare phase until the outcome arrives. If the coordinator is slow or fails at that point, resources stay blocked, which is problematic in distributed systems that require high availability and scalability.
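The two phases can be simulated in a few lines of Python. This is a deliberately simplified, in-memory sketch (the `Participant` class and `two_phase_commit` function are hypothetical): it ignores networking, timeouts, durable logs, and coordinator recovery, but it shows the all-or-nothing voting rule.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Participant:
    name: str
    can_commit: bool = True
    state: str = "idle"  # idle -> prepared -> committed / aborted

    def prepare(self) -> bool:
        # Phase 1: record pending changes, hold locks, then vote.
        if self.can_commit:
            self.state = "prepared"
            return True
        self.state = "aborted"  # a participant voting no can abort on its own
        return False

    def commit(self) -> None:
        self.state = "committed"  # Phase 2: make changes permanent, release locks

    def rollback(self) -> None:
        self.state = "aborted"  # Phase 2: discard pending changes, release locks


def two_phase_commit(participants: List[Participant]) -> bool:
    # Phase 1 (prepare): collect every participant's vote.
    votes = [p.prepare() for p in participants]
    # Phase 2: commit everywhere only on a unanimous yes, else roll back everywhere.
    if all(votes):
        for p in participants:
            p.commit()
        return True
    for p in participants:
        p.rollback()
    return False


inventory = Participant("inventory")
payments = Participant("payments", can_commit=False)
ok = two_phase_commit([inventory, payments])
print(ok, inventory.state, payments.state)  # False aborted aborted
```

In a real deployment, the window between `prepare()` and `commit()` is when a participant holds its locks and cannot decide the outcome on its own, which is where 2PC's blocking comes from.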

How Does the SAGA Pattern Work?

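The SAGA pattern replaces 2PC's global locking with a sequence of local transactions. Each step commits in its own service, and its success triggers the next step. If a step fails, the saga runs the compensating actions of the steps that already completed, typically in reverse order, bringing the system back to a consistent state. There are two main ways to coordinate a saga:

- Orchestration: A central orchestrator tells each participant which local transaction to execute and, on failure, which compensations to run.

- Choreography: There is no central coordinator; each service listens for events published by the others and decides on its own which local transaction or compensation to perform.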
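Orchestration boils down to a central component calling each step in turn. Choreography is less obvious, so here is a hedged Python sketch of it: an in-memory `EventBus` stands in for a message broker such as Kafka, and the order and inventory services are plain functions. All class, function, and event names (`OrderCreated`, `StockReserved`, `StockRejected`) are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[dict], None]


class EventBus:
    """In-memory stand-in for a message broker (hypothetical)."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)
        self.history: List[str] = []  # every event type published, in order

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._handlers[event_type].append(handler)

    def publish(self, event_type: str, payload: dict) -> None:
        self.history.append(event_type)
        for handler in self._handlers[event_type]:
            handler(payload)


bus = EventBus()
orders: Dict[int, str] = {}  # order service state
stock: Dict[str, int] = {"widget": 1}  # inventory service state


# Order service: starts the saga and reacts to the inventory outcome.
def place_order(order_id: int, item: str) -> None:
    orders[order_id] = "PENDING"
    bus.publish("OrderCreated", {"order_id": order_id, "item": item})


def on_stock_reserved(event: dict) -> None:
    orders[event["order_id"]] = "APPROVED"


def on_stock_rejected(event: dict) -> None:
    # Compensation: cancel the order this service created earlier.
    orders[event["order_id"]] = "CANCELLED"


# Inventory service: reacts to OrderCreated without knowing who else listens.
def on_order_created(event: dict) -> None:
    item = event["item"]
    if stock.get(item, 0) > 0:
        stock[item] -= 1
        bus.publish("StockReserved", event)
    else:
        bus.publish("StockRejected", event)


bus.subscribe("OrderCreated", on_order_created)
bus.subscribe("StockReserved", on_stock_reserved)
bus.subscribe("StockRejected", on_stock_rejected)

place_order(1, "widget")  # one widget in stock
place_order(2, "widget")  # none left
print(orders)  # {1: 'APPROVED', 2: 'CANCELLED'}
```

Neither service calls the other directly; each only reacts to events. The handlers here run synchronously for simplicity, whereas a real broker delivers events asynchronously, so an order stays `PENDING` for a while and other readers can observe that intermediate state.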

Why Adopt the SAGA Pattern?

The main advantages of the SAGA pattern are:

- Robustness: Failures are handled explicitly through compensating actions, so the system can recover from a failed step without a global rollback.

- Scalability: Because each step commits locally, no resources are locked across services for the duration of the whole transaction, so each microservice can scale independently.

- Decoupling: Enables services to operate autonomously, facilitating system evolution.

How to Implement the SAGA Pattern?

To implement Sagas:

- Identify the microservices: Define the services that participate in the transaction.

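- Define subtransactions and compensations: Define the local transaction each service performs and the compensating action that undoes it. Compensations should be idempotent, because they may be retried after a failure.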

- Choose orchestration or choreography: Choreography suits short flows with few participants; orchestration is usually easier to follow and debug as the number of steps grows.

- Use a framework: Existing tools can take care of persisting saga state, retries, and messaging for you.
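The checklist above maps onto a small orchestrator. The Python sketch below assumes hypothetical `Step`, `SagaLog`, and `Orchestrator` types; `SagaLog` is an in-memory stand-in for the durable table a framework would manage. Checkpointing after every step is what would let a restarted orchestrator know which steps still need compensating.

```python
import json
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Step:
    service: str                          # participating service
    action: Callable[[dict], None]        # its local transaction
    compensation: Callable[[dict], None]  # the action that undoes it


@dataclass
class SagaLog:
    """In-memory stand-in for a durable saga-state table (hypothetical)."""
    records: Dict[str, str] = field(default_factory=dict)

    def save(self, saga_id: str, state: dict) -> None:
        self.records[saga_id] = json.dumps(state)

    def load(self, saga_id: str) -> dict:
        return json.loads(self.records[saga_id])


class Orchestrator:
    def __init__(self, steps: List[Step], log: SagaLog) -> None:
        self.steps = steps
        self.log = log

    def start(self, saga_id: str, data: dict) -> str:
        state = {"data": data, "completed": 0, "status": "RUNNING"}
        self.log.save(saga_id, state)
        for step in self.steps:
            try:
                step.action(data)
            except Exception:
                state["status"] = "COMPENSATING"
                self.log.save(saga_id, state)
                return self.compensate(saga_id)
            state["completed"] += 1
            self.log.save(saga_id, state)  # checkpoint after each committed step
        state["status"] = "COMPLETED"
        self.log.save(saga_id, state)
        return "COMPLETED"

    def compensate(self, saga_id: str) -> str:
        # Reads everything from the log, so it could also run after a restart.
        state = self.log.load(saga_id)
        while state["completed"] > 0:
            self.steps[state["completed"] - 1].compensation(state["data"])
            state["completed"] -= 1
            self.log.save(saga_id, state)  # checkpoint each undone step
        state["status"] = "COMPENSATED"
        self.log.save(saga_id, state)
        return "COMPENSATED"


# Usage: inventory succeeds, payment fails, so the reservation is released.
calls: List[str] = []


def reserve(data: dict) -> None:
    calls.append(f"reserve {data['sku']}")


def release(data: dict) -> None:
    calls.append(f"release {data['sku']}")


def charge(data: dict) -> None:
    raise RuntimeError("payment service unavailable")


def refund(data: dict) -> None:
    calls.append("refund")


saga_log = SagaLog()
saga = Orchestrator([Step("inventory", reserve, release),
                     Step("payment", charge, refund)], saga_log)
result = saga.start("order-42", {"sku": "A1"})
print(result, calls)  # COMPENSATED ['reserve A1', 'release A1']
```

Frameworks mainly take over the parts this sketch fakes: durable state, messaging, and retries. A crash between running a compensation and saving its checkpoint would repeat that compensation on restart, another reason to make compensations idempotent.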

Tools for Implementing the SAGA Pattern

There are many tools that simplify the implementation of Sagas in distributed architectures:

Libraries and Frameworks

- Spring Boot

  - Spring Cloud Stream: Facilitates event-driven integration between microservices, which suits a choreography-based saga.

  - Spring Cloud Stream Kafka Streams binder: Useful when Kafka is the messaging backbone for Sagas.

- Eventuate Tram: Provides a saga framework (Eventuate Tram Sagas) for orchestration, and its domain-event messaging can be used for choreography.

- Axon Framework: Best known for Event Sourcing and CQRS, it also has built-in saga support. Axon sagas are event-driven components that react to domain events and dispatch commands, which fits distributed architectures well.

- Camunda: A process automation platform that models workflows in BPMN, well suited to orchestration-based Sagas; BPMN compensation events can model the undo steps.

- Apache Camel: Offers a Saga component that facilitates the coordination of distributed microservices through messaging.

Cloud Services

- AWS

  - AWS Step Functions: Ideal for distributed workflows, allowing the implementation of Sagas via orchestration.

  - Amazon SWF: Provides more control over the state of distributed transactions.

- Azure

  - Azure Durable Functions: Allows writing stateful workflows in a serverless environment, ideal for Sagas.

  - Azure Logic Apps: Provides a visual interface for designing and automating workflows.

- Google Cloud

  - Google Cloud Workflows: Allows for orchestrating services and APIs to implement Sagas.

  - Google Cloud Composer: Based on Apache Airflow, ideal for complex workflows.

Other Alternatives

- MicroProfile LRA (Long Running Actions): A MicroProfile specification for coordinating long-running actions with compensation in Java microservices.

- Temporal.io: Open-source platform for durable workflows.

- NServiceBus Sagas: Part of the NServiceBus platform, specialized for .NET.

Considerations for Choosing Tools

When selecting a tool to implement Sagas, consider factors such as:

- Technology stack: Choose tools compatible with the technology already in use.

- Complexity: Tools like Axon Framework or Temporal.io are designed for more complex flows.

- Infrastructure: Cloud options like AWS Step Functions are fully managed, while self-hosted tools like Camunda give you more control over where and how the workflow engine runs.

- Learning curve: Some platforms require more time to master but offer greater flexibility and control.

When Should You Use the SAGA Pattern?

Deciding when to use the SAGA pattern depends on the characteristics of the distributed system and its transaction requirements. SAGA is well suited to complex distributed transactions that must stay available and scalable without locking resources across microservices. The scenarios below show where SAGA is a good fit and where other approaches may be more appropriate.

When to Use the SAGA Pattern

- Long-running distributed transactions: When a transaction spans multiple services and must not hold locks for long, SAGA is a natural fit. Because each step commits locally, no service keeps resources locked while waiting for the others, which improves performance and scalability.

- High fault tolerance: Where robustness is crucial, compensating actions give the system a defined way to recover from a failed step. Consistency is restored eventually rather than guaranteed at every moment: other transactions may observe intermediate states while the saga is running or compensating.

- Decoupled systems: In microservices architectures where services need to operate autonomously, choreography within the SAGA pattern allows services to interact via events, facilitating independent evolution.

- Scalability: For systems that need to scale, SAGA fits well because it does not rely on global transactions that block services; each microservice processes its local transactions independently.

- Complex business processes: In scenarios where business rules span multiple services and require detailed control over transaction flow, the orchestration approach in SAGA provides a centralized view and facilitates error handling.
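Fault tolerance depends on compensations eventually succeeding, even when the service they call is briefly unavailable. A common approach is to retry compensations and make them idempotent, so that repeated requests (from retries or redelivered messages) have no extra effect. The Python sketch below assumes a hypothetical `PaymentService` that uses the payment id as an idempotency key, and a hypothetical `retry_compensation` helper with exponential backoff.

```python
import time
from typing import Callable, Set


class PaymentService:
    """Hypothetical payment service whose refund call fails transiently."""

    def __init__(self, failures_before_success: int) -> None:
        self.remaining_failures = failures_before_success
        self.refunded: Set[str] = set()  # idempotency keys already processed
        self.refund_count = 0  # refunds actually issued

    def refund(self, payment_id: str) -> None:
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("payment service unavailable")
        if payment_id in self.refunded:
            return  # duplicate request: already refunded, so do nothing
        self.refunded.add(payment_id)
        self.refund_count += 1


def retry_compensation(compensate: Callable[[], None], attempts: int = 5,
                       base_delay: float = 0.01) -> None:
    """Retry a compensating action on transient errors, with exponential backoff."""
    for attempt in range(attempts):
        try:
            compensate()
            return
        except ConnectionError:
            if attempt == attempts - 1:
                raise  # out of attempts: a real system would alert or park the saga
            time.sleep(base_delay * 2 ** attempt)


payments = PaymentService(failures_before_success=2)
retry_compensation(lambda: payments.refund("pay-7"))  # succeeds on the third try
retry_compensation(lambda: payments.refund("pay-7"))  # e.g. a redelivered message
print(payments.refund_count)  # 1
```

Only `ConnectionError` is treated as transient here; which errors are worth retrying is a design decision that depends on the services involved.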

When Not to Use the SAGA Pattern

- Short, low-risk transactions: If transactions are simple and don’t span multiple services, SAGA adds unnecessary complexity. A local ACID transaction in the service’s own database is simpler and guarantees consistency without compensating actions. If only a few services are involved and strong consistency is required, 2PC may also be an option.

- Strict strong consistency requirements: If the system must ensure that all services involved in a transaction maintain a consistent state at all times, 2PC might be more appropriate. This is more common in systems where availability is not the main requirement and temporary resource blocking can be tolerated to ensure consistency.

- Environments where transient failures are rare: If intermittent or transient failures seldom occur, building and maintaining compensation logic for every step may be overkill, and traditional transaction management may suffice.
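For comparison, when all the data lives in one service’s database, an ordinary local transaction already provides atomicity with no compensation logic. This sketch uses Python's built-in `sqlite3` module with an in-memory database; the `accounts` table and the `transfer` function are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    " id TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 0)])
conn.commit()


def transfer(src: str, dst: str, amount: int) -> bool:
    """Move money between two accounts atomically in one local transaction."""
    try:
        with conn:  # commits if the block succeeds, rolls back if it raises
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE id = ?", (amount, dst)
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE id = ?", (amount, src)
            )
        return True
    except sqlite3.IntegrityError:
        # The CHECK constraint rejected the debit; the credit above was undone too.
        return False


first = transfer("alice", "bob", 30)    # succeeds
second = transfer("alice", "bob", 500)  # would overdraw alice, so it is rolled back
balances = dict(conn.execute("SELECT id, balance FROM accounts ORDER BY id"))
print(first, second, balances)  # True False {'alice': 70, 'bob': 30}
```

The failed transfer leaves no trace: the database rolled back the credit to `bob` together with the rejected debit, whereas a saga would have to undo an already-committed step explicitly with a compensating action.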

Conclusion

The SAGA pattern is a powerful tool for managing distributed transactions in microservices architectures, providing robustness, scalability, and flexibility in environments where availability is critical. However, like any design pattern, its application depends on context. Applied where transactions are complex and distributed, SAGA can improve fault recovery and overall system performance.

On the other hand, where strong consistency is essential or transactions are simple and short-lived, traditional solutions such as local ACID transactions or Two-Phase Commit (2PC) may be more appropriate. The key is to evaluate the system’s specific needs before choosing the transaction management strategy that best fits its business and technical requirements.